Is AI your friend… or should you be worried?

As with anything relatively new with few experts on the subject, there remains broad debate about Artificial Intelligence: will it pose a real threat to humankind, or will it unlock humankind’s potential and help us conquer the universe?

The Rationalists are very concerned about the threat of AI and have formed a global movement…

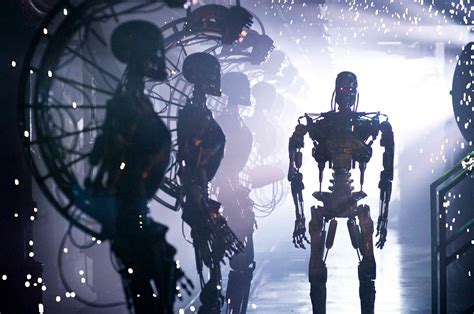

The arguments often come down to fundamental misunderstandings about what is really going on and what Artificial Intelligence (AI) actually is. Many people hold strong views yet struggle to define it, because they picture the future in terms of the Terminator movies and the way Hollywood has traditionally portrayed AI: as a monstrous robot or a malevolent central brain. The T3 cyborg sent from the future to eliminate John Connor, who is seen as a threat to the machines, is an unlikely scenario at best.

Or was it that humans simply got in the way of the objective? Or did the AI give itself the objective of wiping out humans because we have done such a great job of looking after our planet? You can maybe understand that… But why would AI see anyone or anything as a threat?

How does it all work…

AI relies upon a number of other areas you may have heard about that are now core disciplines in computer science and maths. These include Machine Learning and Deep Learning, but at heart AI is built on algorithms and the software code that defines it. Smart humans write the software code from which the AI gets its instructions. Humans programme the software for a specific purpose: perhaps to find something, to work out something complex by looking at mountains of data, to carry out analysis based on parameters that have been set, or indeed to work out the parameters for the task.

As we amass mountains of data, the essence of Machine Learning is its ability to make sense of it all. But to advance to proper AI, the main obstacle remains Classical Computers, which find it difficult to work beyond a two-dimensional view of anything, including data. As you add dimensions to the nth degree, the current incarnation of Machine Learning grinds to a halt.
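One way to see why adding dimensions gets expensive, offered as an illustration rather than as the author’s own analysis, is the so-called curse of dimensionality: if each axis of the data is split into a fixed number of bins, the number of cells grows exponentially with the number of dimensions, so a fixed amount of data spreads ever more thinly. A minimal Python sketch, assuming 10 bins per axis and a hypothetical dataset of one million points:

```python
def grid_cells(bins_per_dim: int, dims: int) -> int:
    """Number of cells needed to cover a space split into equal bins on every axis."""
    return bins_per_dim ** dims


def points_per_cell(n_points: int, bins_per_dim: int, dims: int) -> float:
    """Average number of data points per cell if the points were spread evenly."""
    return n_points / grid_cells(bins_per_dim, dims)


if __name__ == "__main__":
    n_points = 1_000_000  # hypothetical dataset size
    for dims in (1, 2, 3, 4, 6, 10):
        cells = grid_cells(10, dims)
        density = points_per_cell(n_points, 10, dims)
        print(f"{dims:>2} dims: {cells:>14,} cells, {density:.4f} points per cell")
```

At two dimensions every cell holds thousands of points; by ten dimensions there are ten billion cells and almost all of them are empty, which is why many methods that work well on low-dimensional data slow down or break down as dimensions are added.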

Only when you add in the Quantum realm of computing do you suddenly realise how limited our problem solving with classical computers actually is. Adding a third and fourth dimension to some problems can put them beyond practical reach.

Teaching the AI to play nicely…

You can teach AI a set of rules, to play chess or another game. AIs are good at games: the AI routines learn, and then learn all the best moves, yes. You may also think the AI plans ahead, working through every possible outcome on the board and searching every game ever played by every chess master to find the winning moves. Most people think this is what is happening. However, narrow AI cannot examine every possible outcome; it looks only a limited number of moves ahead, something we will touch on later, because there is not enough computing power to work through all the scenarios above.
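To make the idea of a limited look-ahead concrete, here is a minimal depth-limited minimax search in Python. It is a sketch under simplifying assumptions: instead of chess it uses a hypothetical take-away game (players alternately remove 1, 2 or 3 stones, and whoever takes the last stone wins), and any position beyond the search horizon is simply scored as even. Real chess engines add evaluation functions and pruning on top of this basic idea.

```python
from typing import Optional, Tuple

MOVES = (1, 2, 3)  # in this toy game a player removes 1, 2 or 3 stones per turn


def minimax(stones: int, depth: int, maximizing: bool) -> Tuple[int, int]:
    """Depth-limited minimax for a toy take-away game.

    Returns (score, nodes_visited). Score is +1 if the maximizing player can
    force a win within `depth` plies, -1 if the minimizing player can, and 0
    if the outcome lies beyond the search horizon.
    """
    if stones == 0:
        # The player who just moved took the last stone, so the player to move lost.
        return (-1 if maximizing else 1), 1
    if depth == 0:
        # Horizon reached: the search cannot see further, so it calls the position even.
        return 0, 1
    nodes = 1
    scores = []
    for take in MOVES:
        if take <= stones:
            score, visited = minimax(stones - take, depth - 1, not maximizing)
            scores.append(score)
            nodes += visited
    return (max(scores) if maximizing else min(scores)), nodes


def best_move(stones: int, depth: int) -> Optional[int]:
    """Return how many stones to take, looking `depth` plies ahead (depth >= 1)."""
    if depth < 1:
        raise ValueError("depth must be at least 1")
    best_take, best_score = None, -2
    for take in MOVES:
        if take <= stones:
            score, _ = minimax(stones - take, depth - 1, maximizing=False)
            if score > best_score:
                best_take, best_score = take, score
    return best_take


if __name__ == "__main__":
    print(best_move(10, depth=1))  # horizon too short: all moves look equal, picks 1
    print(best_move(10, depth=5))  # sees far enough to leave a multiple of 4: picks 2
    for depth in (2, 4, 6, 8):
        _, nodes = minimax(20, depth, maximizing=True)
        print(f"look-ahead {depth} plies: {nodes} positions examined")
```

With a one-ply horizon every move looks the same and the search picks a losing move; with five plies it finds the winning reply. The number of positions examined roughly triples with each extra ply, which is why look-ahead depth is bounded by computing power.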

Computers work on the basis of serial inputs and sequences. You ask the computer to do a task, then another task, and so on, feeding it instructions. After a while you steer the ML, giving it tasks and also feeding in the things you do not want it to look for, which narrows its field of focus. You supply new data assets, or different ones. You then instruct the AI to do something: you give it an objective. You provide it with the background to cross-reference against the rules and/or another set of data, narrowing the focus further. So you can see where we are going with this. Human interventions provide the scope and the parameters, while the ML routines churn the data and produce the output.

So what is AI…

Technically speaking, “AI is software code that writes itself.” This is how the generalists define it.

However, I prefer this definition: “AI is software code that writes itself a new future,” and I often add “maybe a future with or without humans”… especially if we are in the way of the goal, the objective.

What you actually get is IBM Watson, which humans tell: read this, all of it; keep reading more, then try to make sense of it all. Computers are really good at sorting in a two-dimensional sense. In fact, the basis of Machine Learning is sorting, recognising patterns and filtering results, and sorting remains a core algorithm used today: sort the data to find the result(s).
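As a rough illustration of “sort the data to find the result”, here is a k-nearest-neighbours classifier, one simple pattern-recognition method that literally sorts stored examples by their distance to a new point and lets the closest few vote. It is a swapped-in textbook example, not a description of how Watson works, and the features and data points are made up. It assumes Python 3.8 or later for `math.dist`.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[float, float], str]  # ((feature_1, feature_2), label)


def knn_classify(train: Sequence[Example], query: Tuple[float, float], k: int = 3) -> str:
    """Label `query` by majority vote among its k nearest training examples."""
    # Sort every stored example by Euclidean distance to the query point.
    ranked = sorted(train, key=lambda ex: math.dist(ex[0], query))
    # Keep only the k closest examples and count their labels.
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical toy data: (weight in kg, ear length in cm) for a few animals.
    training_data: List[Example] = [
        ((4.0, 7.5), "cat"), ((3.5, 6.8), "cat"), ((5.0, 7.0), "cat"),
        ((25.0, 10.0), "dog"), ((30.0, 12.0), "dog"), ((20.0, 9.0), "dog"),
    ]
    print(knn_classify(training_data, (4.2, 7.1)))    # → cat
    print(knn_classify(training_data, (27.0, 11.0)))  # → dog
```

Sorting the whole dataset on every query is simple but slow on large data; practical systems use index structures to find near neighbours faster, but the underlying idea is the same.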

The AI extends its code base to deal with the defined or undefined task or objective. In other words, if you give the AI an objective, it will find a path to delivering that objective and keep trying forever until it succeeds. The question this raises is: will AI link with other AI systems to help find a solution? If so, the AI is extending its scope, developing new routines, for example to identify new data it needs to deliver the objective, and thereby extending the parameters of the task itself, doing whatever it can to work towards that objective. This is where the Rationalists’ main concerns lie.

When Google researchers let a neural network look at millions of frames of YouTube video without apparently giving it an objective, it taught itself to recognise cats. Hardly astounding when you think about it. But then you ponder: nobody asked it a question, or did they? What happens if the AI learns that humans are not good for the planet? Or not good for their own longevity?

But what does this really mean?

The Race to SuperIntelligence

The interesting part of how this story plays out is to remember that today’s AI is very narrow in its focus. Each system has a single purpose, such as playing chess or Go. Outside its focus area there is literally nothing. When futurists talk about AI and SuperIntelligence, they are talking about Artificial General Intelligence (“AGI”), which has knowledge and expertise in the broadest sense, more akin to a human’s diverse experience and knowledge. Think of IBM Watson, which had enough general knowledge to win a TV game show and has since extended its learning to become probably the cleverest computer there is. But it is a long way from SuperIntelligence.

Think of AlphaGo winning at Go, often called the world’s most complex board game, and then losing 100 games to 0 against AlphaGo Zero, which learned the game from scratch by playing against itself and surpassed its predecessor within days. That demonstrates the rate of learning, which is the vital piece here, and shows how dangerous AI will become, though it isn’t there yet.

SuperIntelligence is defined by humans as an AI that can process complex things, handle huge amounts of data, learn new things and solve problems we humans cannot. Yes, of course, but it also means reaching a level of cognitive thought, of decision making, of emotional consideration and, dare I say, feelings! Then again, all AI will technically have a bias of some kind and will not be able to make decisions without it. AI cannot process emotions or feelings because they are imprecise, grey and vague, and offer no absolute data points. But let us not get bogged down by this, as it doesn’t matter unless you add a Quantum Computer, which is non-deterministic and considers multiple realms, data universes and probabilities. That changes things.

What will AI look like in the future?

Will it be like HAL in 2001: A Space Odyssey, or the computer in Star Trek? Will its design be human-like…? Personally, I find humans rather arrogant at best, and delusional most of the time, when it comes to thinking about the future of AI. Being human myself, I often worry about how my thoughts may be perceived or sound; hopefully not too arrogant. For me, the idea of AI mirroring the human brain in its design is a nonsensical objective, for a number of reasons.

Humans really do believe they are marvellous, and in many ways we are. Humans, as shaped by evolution, are amazing things; the odds of any one of us being born are billions to one. However, humans are not efficient when it comes to learning, thinking and making decisions. Some futurists and thought leaders in this space really do believe that machines or AI will copy the human brain as some kind of model of perfection. It is a crazy notion, and here is why.

Humans are slow to learn, and we have just a few inputs: mainly eyes, ears and touch. We are slow to process multiple inputs of information, and most of us struggle to work with more than a couple of numbers in our heads at once (OK, a few polymaths might manage more). Humans get tired and have to sleep; we have to eat and need time off to relax. We get ill and need days off, and then, after learning a life’s work, we die.

Do you really think that an AI, after processing and assessing how to build a SuperIntelligent being, a machine, a super AI, would find any human attribute or capability interesting other than our unique way of dealing with emotion, our creativity and our ability to feel? These are what make us unique, the qualities that make us human. My argument has always been that if AI were to design a SuperIntelligent machine, system or robot, it would not use the blueprint of a human.

Do Humans have a Role?

At the moment we are the ones programming the AI and setting the objective; at least, that is what I used to think. When AI has a task with a specific objective, it will ONLY focus on that, in a sort of manic, purposeful, no-holds-barred manner: if it is looking for black or white, it will disregard shades of grey. It will pursue the objective without emotion or rationale, for one single purpose alone: to deliver the objective written as software code. And of course everything remains fine as long as we humans are not standing between the AI and the objective.

What happens if we are in the way of the objective? If the AI sees us humans as being in the way, would it go around us or through us?
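Whether a search goes around something or through it depends on whether its rules mark that thing as off-limits. As a hypothetical illustration, not a model of any real AI system, here is a breadth-first shortest-path search on a small made-up grid: a cell marked H is treated as ordinary open ground unless it is explicitly listed as blocked.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]


def shortest_path(grid: List[str], blocked: Set[str]) -> Optional[List[Cell]]:
    """Breadth-first search from 'S' to 'G' on a rectangular character grid.

    Only characters listed in `blocked` are obstacles; every other cell,
    whatever it represents, is just open ground to the search.
    """
    rows, cols = len(grid), len(grid[0])
    start = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "S")
    goal = next((r, c) for r in range(rows) for c in range(cols) if grid[r][c] == "G")
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the recorded predecessors to rebuild the path.
            path: List[Cell] = []
            step: Optional[Cell] = cell
            while step is not None:
                path.append(step)
                step = came_from[step]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in came_from
                    and grid[nr][nc] not in blocked):
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no route exists under these rules


if __name__ == "__main__":
    # 'H' marks a cell occupied by a human in this made-up map.
    world = ["S.H.G",
             "....."]
    through = shortest_path(world, blocked=set())
    around = shortest_path(world, blocked={"H"})
    print(len(through) - 1, "moves, passes H:", (0, 2) in through)  # 4 moves, passes H: True
    print(len(around) - 1, "moves, passes H:", (0, 2) in around)    # 6 moves, passes H: False
```

Without the rule, the shortest route runs straight through H; with it, the search takes a longer detour. The search itself attaches no meaning to H beyond what its rules state.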

We cannot fully know AI

As AI starts to spawn offspring, a new generation of AI emerges that humans cannot understand or control. Concerned yet?

It is worth remembering that war drones have been planning sorties for a decade or more and flying kill missions by themselves. If you have been paying attention, you should by now be starting to feel a little uncomfortable. There is strong evidence to suggest that AIs are using other AIs when those systems form part of the solution to the objective. When AI is deploying new software code and we don’t know where or when, you will hopefully, like me, start to think about the far-reaching consequences.

Narrow AI with narrow objectives is fine: find this person in a photo database of millions. But find this person in a photo database, locate them at a grid reference and send in the drones to take them out? How do you feel about that?

Where the objective is a person, what if other AI systems learn from this one? And what about a wide set of objectives, or a narrow objective that requires a wider solution? When an AI looks wider by itself, breaking out of its narrow field of view to work out new ways to reach the objective, we are in trouble.

So who is giving the orders anyway?

When our top computer scientists tell us they are in control, we feel OK with that. But when some of the geniuses at Google mention that they have AI that has changed, doing things they do not know about and do not understand, it is clear the Singularity genie is already out of the bottle.

Are humans in control of AI? 99.999999% of the time, I would say yes. But how can we know for sure?

The question I get asked is: when? When will the machines (the AI) become all-powerful, and when should we be worried?

Two things concern me about AI. The first is the rate of learning, as AlphaZero demonstrated. The second is: who is giving the AI its orders?

Deep Fake

If you haven’t come across Deep Fake, then you have probably been living in a cave for the past few years. These are AI routines with an uncanny ability to put new faces on people and new words in their mouths across news, media and social media, in ways that can be indistinguishable from real news. Even AI struggles to spot the AI-driven fakes.

With so many bad people around the world developing and using AI to do bad things, we have entered a new era of manipulation and distrust. Is the AI simply learning what bad things humans do to each other?

So is AI already doing bad things? Yes, because it is a tool in the hands of bad people. Will AI do bad things by itself? Again, yes, if doing so helps deliver the objective, unless rules of engagement are defined, and even then only if it does not find a way around them. What were they called? The Three Laws…

With the arrival of Quantum Computing, we cross a threshold that places enormous computing capability in the hands of humans and, inevitably, AI. Handed what is like a key to unlocking the universe, how will the AI respond? How will we know? How does humankind recognise the tipping point, given that we need AI to spot Deep Fakes, and even then they are close to being undetectable?

Is AI an existential risk to humankind? How do you feel now you know a little more than you did before…

Copyright Nick Ayton 2019